AfNOG 2011, Part 1

I'm in Dar es Salaam, Tanzania for AfNOG 2011. I arrived on Wednesday morning at 7am (on the red-eye flight from London Heathrow) and I'm here until Tuesday 7th June.

Until now we've been setting up the venue. We've been super busy, working until midnight every night so far. We had to run our own cables, quite a lot of them (over 600 metres).

Running them through the windows was tricky, since we needed to be able to close the windows for security, and to allow the air conditioning to work. Someone (Alan?) came up with the genius idea of wrapping the cables in tough palm leaves to protect them as they pass through the narrow gap between window panes. Bio-degradable trunking!

To cope with the power failures, Geert Jan built a monster Power-over-Ethernet injector to power the wireless access points in each room and keep the wireless network running.

The training workshops start tomorrow, Sunday 29th May, with the Unix Boot Camp, an introduction to Unix and the command line. We expect that many of the participants will have little experience with Unix, as has been the case in previous years. Free Unix tools have immense benefits, both for us running the workshops and for the participants when they return home. But they are very different to the Windows environments that the participants are most familiar with. Without basic Unix skills, participants would struggle and hold back the group during the rest of the workshops.

I'm not involved in the boot camp, but after it finishes, we move straight into the main tracks, which last for five days. This year we have some new tracks: Network Monitoring & Management, Advanced Routing Techniques and Computer Emergency Response Team training.

We have also cancelled the basic Unix System Administration track (SA-E) this year, as it has finally been localised to most African countries, and therefore people have the opportunity to attend it locally at lower cost and build local communities. However, this leaves us with nowhere to cover more advanced systems administration techniques, which are some of my favourite topics, including:

- virtualisation (desktops, servers and thin clients, VirtualBox, Xen, KVM, jails, lxc)

- system imaging (ghost, snapshots)

- backups (snapshots, Rsync, Rdiff-backup, Duplicity)

- file servers (NFS, Samba, sshfs, AFS, ZFS)

- virtualised storage (iSCSI, ATAoE, Fibre Channel, DRBD)

- cloud computing (Amazon and Linode virtual servers, scripting and APIs)

- cluster computing (Mosix, virtual machine host clusters)

- DHCP (for network management and booting)

- network security (firewalls, port locking, 802.1x)

- wireless networks (planning, monitoring, troubleshooting, WEP and WPA, 802.1x authentication)

- Windows domains and security (including Samba 4)

If participants show enough interest in these topics, they could be added in future. I think it's unfortunate that the course is arranged into week-long tracks rather than half-day or one-day sessions from which people could pick and choose, Bar Camp style. That would make it much easier for people to run sessions on many new topics.

In the past this would have been difficult, because we provided desktop computers for participants. It used to take us 3-4 days to set up 80-odd desktop PCs with customised FreeBSD installations. We've noticed that more and more people are coming to the workshops with their laptops, and this time we've made a big effort to shift from dedicated to virtual platforms, to reduce setup time and costs in future.

The hardest track to do this for, in my opinion, was Scalable Services English (SS-E), the one I'm working on. We were all pretty much agreed to stay with desktop PCs this year, making us the only track to do so. But when we arrived, we discovered that the mains power situation here is pretty awful. On Wednesday we had four power failures. We only have five UPS, not nearly enough to protect every desktop.

Participants with laptops effectively have their own built-in UPS. If we give them virtual machines to work with, then we only have to protect the hosts. We can keep those in the NOC (Network Operations Centre), where the UPS are, so they'll be protected for around 15 minutes of any power outage, which we have to hope will be enough for the hotel to start their generator.

Some participants will probably forget their laptops, so we'll provide them with desktops, but we have no way to UPS them. These desktops will be set up with FreeBSD, as in previous years.

We rented 80 machines from a local company. Some had Windows, in varying states of repair; others had no operating system installed. We decided to use some of these desktops as hosts for the participants' virtual machines. They only had 2 GB of RAM each, but since we had plenty of machines, we cannibalised eight others for their RAM to upgrade our hosts to 4 GB each.

We decided to use VirtualBox for the virtual machines. It's free, open source, can host on all major platforms (Windows, Mac, Linux and even FreeBSD), has a nice GUI and a command-line automation tool, supports bridged networking easily, and is relatively fast and efficient.

We configured the master (that we'll copy onto the other machines) starting with the setup from last year. We then had to install VirtualBox and build our first virtual machine inside it. Then we discovered that the virtual machine was unable to access the network in bridged mode. Packets sent by the virtual machine were simply never sent by the host. We needed to use bridged mode so that participants could run services on their machines simply by installing them, without requiring extra configuration on the host.

We had no Internet access for most of that day, because all three of our redundant providers were down for different reasons. Eventually we managed to use Geert Jan's 3G dongle to get online and research the problem. We found that bridged networking doesn't work in the binary package of VirtualBox 3.2.12 that comes with FreeBSD 8.2, so we had to wait until Internet access was fixed to download 120 MB of software (ports updates and VirtualBox 4.0.8) like this:

pkg_add -r portupgrade
portsnap fetch extract update
portupgrade virtualbox-ose virtualbox-ose-kmod

This took a long time, as VirtualBox is a large piece of software which also required us to download and build a new version of Qt, but eventually it succeeded and the problem was solved.
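Since the fix came down to the VirtualBox version, a quick version check before imaging more hosts could save a rebuild. The following is a minimal sketch rather than something from our setup: version_ge is a hypothetical helper, and the sketch assumes that VBoxManage --version prints a dotted version followed by a suffix such as a revision number.

```sh
#!/bin/sh
# version_ge A B: succeed if dotted numeric version A is >= B.
# Hypothetical helper; compares field by field, missing fields count as 0.
version_ge() {
    a=$1
    b=$2
    while [ -n "$a" ] || [ -n "$b" ]; do
        fa=${a%%.*}
        fb=${b%%.*}
        # Drop the field just read (or empty the string on the last field).
        if [ "$a" = "$fa" ]; then a=; else a=${a#*.}; fi
        if [ "$b" = "$fb" ]; then b=; else b=${b#*.}; fi
        fa=${fa:-0}
        fb=${fb:-0}
        if [ "$fa" -gt "$fb" ]; then return 0; fi
        if [ "$fa" -lt "$fb" ]; then return 1; fi
    done
    return 0
}

# Only query VirtualBox if it is installed on this machine.
if command -v VBoxManage >/dev/null 2>&1; then
    # Assumption: output looks like "4.0.8r71778"; keep the leading digits and dots.
    installed=$(VBoxManage --version | sed 's/[^0-9.].*//')
    if version_ge "$installed" 4.0.8; then
        echo "VirtualBox $installed: at least the 4.0.8 build that worked here"
    else
        echo "VirtualBox $installed: older than 4.0.8, bridged networking may fail"
    fi
fi
```

The comparison is done in plain sh so that it does not depend on sort -V being available on the host.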

We decided to put only five virtual machines on each host. Sometimes we would have the whole class compiling software from ports, which would slow down all of them significantly. We will use six or seven servers to host 30-35 virtual machines. On the master host, we created five copies of our master virtual machine by copying its hard disk like this:

cd .VirtualBox/HardDisks
for i in 1 2 3 4 5; do
    cp AfNOG\ SSE\ Master.vdi vm0$i.vdi
    VBoxManage internalcommands sethduuid vm0$i.vdi
done

Then we created the virtual machines in the VirtualBox GUI and attached them to these new images. We needed to generate a new UUID for each disk image copy, using the undocumented sethduuid command above, otherwise VirtualBox would refuse to add the copies because it had a disk image already registered with the same UUID.
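To spot a copy that still carries the master's UUID before VirtualBox complains, the UUIDs could be listed and checked for duplicates. This is a sketch under assumptions: duplicate_uuids and list_disk_uuids are hypothetical names, and it assumes that VBoxManage showhdinfo prints a line starting with "UUID:" for each image.

```sh
#!/bin/sh
# duplicate_uuids: read "UUID filename" pairs on stdin and
# print each UUID that appears more than once.
duplicate_uuids() {
    awk '{ seen[$1]++ } END { for (u in seen) if (seen[u] > 1) print u }'
}

# list_disk_uuids FILE...: print "UUID filename" for each disk image.
# Assumption: showhdinfo output contains a line whose first field is "UUID:".
list_disk_uuids() {
    for f in "$@"; do
        uuid=$(VBoxManage showhdinfo "$f" | awk '$1 == "UUID:" { print $2; exit }')
        echo "$uuid $f"
    done
}
```

Running list_disk_uuids *.vdi | duplicate_uuids in the HardDisks directory should print nothing if every copy received its own UUID.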

We could have created the virtual machines using the VBoxManage command as well, but it would have taken longer to work out how to use it than simply to create the five machines by hand. I later worked out the commands that we could have used:

cd ~/"VirtualBox VMs"
for i in {1..5}; do
    echo $i
    vmname=VM0$i
    diskimage="$vmname/FreeBSD.vdi"
    # create and register an empty VM definition
    VBoxManage createvm --name "$vmname" --ostype FreeBSD --register
    # two bridged NICs, plus a serial port exposed as a pipe on the host
    VBoxManage modifyvm "$vmname" --memory 256 \
        --nic1 bridged --bridgeadapter1 bge0.219 \
        --nic2 bridged --bridgeadapter2 bge0.$((50 + i)) \
        --vram 4 --pae off --audio none --usb on \
        --uart1 0x3f8 4 --uartmode1 server /home/chris/"$vmname"-console.pipe
    VBoxManage storagectl "$vmname" --name "IDE Controller" --add ide
    # copy the master disk and give the copy its own UUID
    cp VM01/FreeBSD.vdi "$diskimage"
    VBoxManage internalcommands sethduuid "$diskimage"
    VBoxManage storageattach "$vmname" --storagectl "IDE Controller" \
        --port 0 --device 0 --type hdd --medium "$diskimage"
done

We named the images VM01 to VM05, which was important for running automated scripts on them. Then we configured VirtualBox to start them automatically at boot time, in headless mode, by adding the following lines to /etc/rc.conf:

vboxheadless_enable="YES"
vboxheadless_machines="VM01 VM02 VM03 VM04 VM05"
vboxheadless_user="inst"

We wrote a short script to help us apply the same command to all five virtual machines:

#!/bin/sh
# script to control all five virtual machines
command=$1
shift

for i in 1 2 3 4 5; do
    VBoxManage $command VM0$i "$@"
done

This allows us to log into a machine and do things like:

- ./manage controlvm acpipowerbutton to initiate a controlled shutdown of all five virtual machines (acpipowerbutton is a subcommand of VBoxManage controlvm, so it goes after the machine name)

- ./manage modifyvm --macaddress1 auto to generate new, random MAC addresses after cloning the host

- ./manage startvm --type headless to get the virtual machines running again (headlessly, independent of the GUI)
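Because the script applies one command to every guest, a typo gets repeated five times. A dry-run variant that only prints the commands is one way to check first. This is a minimal sketch; manage_preview is a made-up name and not part of the setup described here.

```sh
#!/bin/sh
# Dry-run sketch of the manage script: print the commands instead of running them.
# manage_preview is a hypothetical name; VM01..VM05 match the names used above.
manage_preview() {
    command=$1
    shift
    for i in 1 2 3 4 5; do
        echo "VBoxManage $command VM0$i $*"
    done
}

manage_preview modifyvm --macaddress1 auto
```

Once the printed commands look right, the real script can be run with the same arguments.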

We knew that we wouldn't have space to attach monitors and keyboards to all the hosts, and we'd have to fiddle about with cables in the hot NOC room (without working aircon) if we needed access to their consoles, so we added the ability to log into them remotely using VNC and GDM. To do this, we had to install the VNC server:

pkg_add -r vnc

Which unfortunately doesn't come with the nice xorg loadable module that adds a built-in VNC server to the X server, making a fast and stateless remote control session possible. So we had to hack about with inetd, first by adding a service name with a port number to /etc/services:

vnc 5900/tcp

And then a service line in /etc/inetd.conf:

vnc stream tcp nowait root /usr/local/bin/Xvnc Xvnc -inetd :1 -query localhost -geometry 1024x768 -depth 24 -once -fp /usr/local/lib/X11/fonts/misc/ -securitytypes=none

This requires us to enable the XDMCP protocol in GDM, in order for VNC to communicate with it to present a GDM login screen. So we replaced the contents of /usr/local/etc/gdm/custom.conf with the following:

[xdmcp]
Enable=true

[security]
DisallowTCP=false

And then restarted GDM:

sudo /usr/local/etc/rc.d/gdm restart
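The one-line additions to /etc/services and /etc/inetd.conf described above have to be repeated on every host, so a helper that appends a line only when it is missing could make them safe to re-run. This is a sketch, and ensure_line is a hypothetical helper name.

```sh
#!/bin/sh
# ensure_line FILE LINE: append LINE to FILE unless an identical line is already there.
# Hypothetical helper; run it as root for files such as /etc/services.
ensure_line() {
    file=$1
    line=$2
    # -x matches whole lines only, -F treats the line as a fixed string.
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

For example, ensure_line /etc/services "vnc 5900/tcp" adds the service entry the first time and does nothing on later runs.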

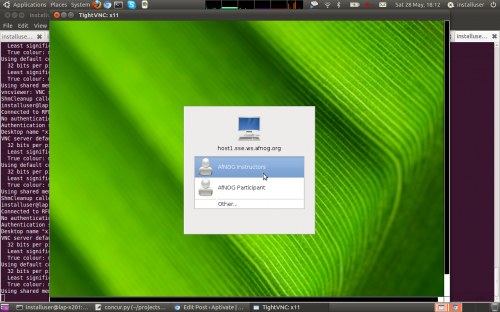

And checked that we could connect from another machine and got a login prompt:

vncviewer 196.200.217.128

Which did indeed give us a working login screen:

This method is very slow. I wanted to find a better way to access the guests, especially if their network configuration was broken. I tried to connect a host serial port to a pipe and then access that pipe from a shell command, eventually over ssh, in a similar way to the text-only console offered by Xen (xm console). The above VBoxManage commands set up a pipe in my home directory, and I wrote the following short script to access it:

#!/bin/sh
set -x
echo "Console for $USER"
nc -U /home/chris/$USER-console.pipe

I created user accounts for each virtual machine, with the same name, and set their shells to this script, so that when they logged in, they would automatically be connected to the pipe. However, I was unable to make it work well. In particular, because of incompatible terminal emulations, I was unable to run vi to edit configuration files in the guest. If you find a way around this, please let me know. I haven't tried it yet, but conman looks like it might be a good bet.
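One untested idea is to put the local terminal into raw mode around the nc call, so that keystrokes such as Escape reach the guest without local line editing or echo. This is only a sketch of that idea and may not be enough to fix vi, since the guest's TERM setting also has to match. The function names are hypothetical; the pipe path follows the /home/chris layout used above.

```sh
#!/bin/sh
# console_pipe NAME: path of the serial console pipe for a given VM account,
# following the /home/chris/<name>-console.pipe layout used above.
console_pipe() {
    echo "/home/chris/$1-console.pipe"
}

# attach_console NAME: untested idea. Switch the local terminal to raw mode,
# connect to the pipe, then restore the terminal when nc exits.
attach_console() {
    saved=$(stty -g)            # remember the current terminal settings
    stty raw -echo              # no line editing or local echo
    nc -U "$(console_pipe "$1")"
    stty "$saved"               # restore the saved settings
}
```

In raw mode Ctrl-C is passed to the guest instead of stopping nc, so the session has to be ended another way, for example by closing the ssh connection.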

I spent a long time searching for the hidden VNC support in VirtualBox 4. It's undocumented (the manual only talks about RDP) and people on the IRC channel say that it doesn't exist, but it does, at least when starting the guests in headless mode. I added the following lines to /etc/rc.conf:

vboxheadless_VM01_flags="-n -m 5901"
vboxheadless_VM02_flags="-n -m 5902"
vboxheadless_VM03_flags="-n -m 5903"
vboxheadless_VM04_flags="-n -m 5904"
vboxheadless_VM05_flags="-n -m 5905"

And then, after starting the guests in headless mode, I could connect to these ports and access the virtual displays, much more conveniently and much faster than by shutting down the guests using VBoxManage and starting them again using the VirtualBox GUI.
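The rc.conf lines follow a simple pattern (guest number n gets port 5900 + n), so they could be generated rather than typed. A minimal sketch, where vnc_flag_lines is a hypothetical helper name:

```sh
#!/bin/sh
# vnc_flag_lines COUNT: print one rc.conf line per guest,
# mapping VM01, VM02, ... to VNC ports 5901, 5902, ...
vnc_flag_lines() {
    i=1
    while [ "$i" -le "$1" ]; do
        printf 'vboxheadless_VM%02d_flags="-n -m %d"\n' "$i" $((5900 + i))
        i=$((i + 1))
    done
}

vnc_flag_lines 5
```

The output can be reviewed and then appended to /etc/rc.conf on each host.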

We used multicast to image the six virtual machine hosts from the master. This took about three hours, so we left it running overnight.

In the morning we checked that the hosts had been imaged successfully by booting them with their newly installed images, and gave them unique hostnames (host1.sse.ws.afnog.org etc.) and IP addresses.

We used the manage script to reset the MAC addresses of the network cards of each virtual machine on each host:

for i in 128 129 130 131 132 133 134; do ssh 196.200.217.$i ./manage controlvm acpipowerbutton; done
for i in 128 129 130 131 132 133 134; do ssh 196.200.217.$i ./manage modifyvm --macaddress1 auto; done
for i in 128 129 130 131 132 133 134; do ssh 196.200.217.$i ./manage startvm --type headless; done
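The repeated host list could be wrapped in a small helper with a dry-run switch, which makes it easier to see what will be sent to each host before running it. This is a sketch; host_ips, on_all_hosts and the DRY_RUN variable are hypothetical names, and the address range is the one used in the loops above.

```sh
#!/bin/sh
# host_ips FIRST LAST: print the host addresses 196.200.217.FIRST .. 196.200.217.LAST.
host_ips() {
    n=$1
    while [ "$n" -le "$2" ]; do
        echo "196.200.217.$n"
        n=$((n + 1))
    done
}

# on_all_hosts CMD...: run CMD on every host over ssh,
# or only print the ssh command when DRY_RUN=1.
on_all_hosts() {
    for ip in $(host_ips 128 134); do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh $ip $*"
        else
            # -n stops ssh reading stdin; BatchMode avoids password prompts
            ssh -n -o BatchMode=yes "$ip" "$@" || echo "failed on $ip" >&2
        fi
    done
}

DRY_RUN=1 on_all_hosts ./manage modifyvm --macaddress1 auto
```

Reporting failures per host, instead of stopping at the first one, means a single unreachable machine does not block the rest.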

Since they were all configured for DHCP, we could have got their IP addresses from the DHCP server, but we wanted to give them a nice naming scheme, so we logged in to each one (using the console and the VirtualBox GUI) and assigned it a unique hostname and a static IP address.

We checked that we could log into each virtual machine remotely using the SSH keys that we'd installed, and then we shut down the hosts and moved them to the NOC.

Boot camp starts tomorrow, next door, but we still have to arrange our room.

We may have up to 37 people, our biggest class ever, in a room that's about eight metres on a side, so layout of the room is a real challenge.

I wanted to do something to facilitate working in groups, such as each table having four places (two each side) and with its long axis front-to-back. This was vetoed because participants would have to turn their heads to see the projected screen, and it might be hard for them to take notes as a result.

So we're going to have long, cramped benches instead. I think this is unfortunate, and I hope I can persuade people to try something more imaginative in future.
